In retrospect: AI4Life Public Challenges

AI4Life’s mission isn’t just to assist individual projects; it also seeks to benchmark and push the boundaries of AI methods for bioimage analysis. Over three years, the project has organised three public challenges, inviting the computational community to compete on core imaging tasks under shared datasets, metrics, and conditions.

Overview & evolution

First Challenge: unsupervised denoising

AI4Life launched the inaugural challenge focusing on unsupervised denoising of microscopy images. Rather than relying on paired “noisy/clean” image sets, participants were invited to apply algorithms that learn denoising from noisy data alone.

This setup reflects a practical constraint in microscopy: obtaining “clean” reference images is often difficult or impossible. The goal was to reduce noise while preserving delicate image features like edges, textures, and fine detail.

Four datasets were used, covering two types of noise (structured and unstructured). Challenge page: https://ai4life-mdc24.grand-challenge.org/ai4life-mdc24/

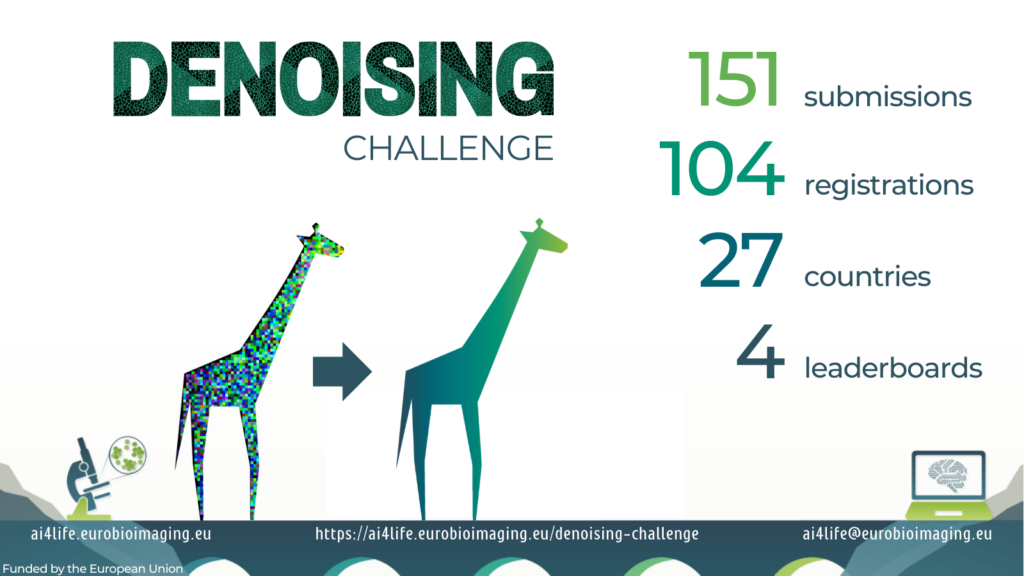

The 2024 AI4Life Denoising Challenge drew 104 registrants from 27 countries, resulting in 151 submissions across four leaderboards (each corresponding to a dataset/noise type).

The top results included combinations of algorithms such as COSDD and N2V (Noise2Void), which delivered strong performance across modules.

Find out more: https://ai4life.eurobioimaging.eu/ai4life-denoising-challenge-2024-results/

Second & Third Challenges: transitioning to supervised denoising

Following the insights from 2024, the 2025 edition shifted focus to supervised denoising, in which models are trained on paired noisy and clean images, and added a specialised calcium-imaging track. Having ground truth available allows for more precise performance evaluation and potentially better denoising.
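To illustrate why paired ground truth makes evaluation more precise, here is a minimal sketch of a full-reference score, peak signal-to-noise ratio (PSNR), computed between a clean image and a denoised one. This is an assumption for illustration only: the metrics actually used on the challenge leaderboards are documented on the Grand-Challenge pages, and the function name `psnr` and its `data_range` parameter are hypothetical. The sketch uses flat lists of pixel values to stay dependency-free; in practice one would likely work with NumPy arrays.

```python
import math


def psnr(clean, denoised, data_range=None):
    """Peak signal-to-noise ratio (dB) between two equally sized pixel sequences.

    Illustrative only: not the official challenge metric implementation.
    """
    if len(clean) != len(denoised) or len(clean) == 0:
        raise ValueError("inputs must be non-empty and of equal length")

    # Assumption: if no range is given, use the span of the clean image.
    if data_range is None:
        data_range = max(clean) - min(clean)
    if data_range <= 0:
        raise ValueError("data_range must be positive")

    # Mean squared error against the ground-truth image.
    mse = sum((c - d) ** 2 for c, d in zip(clean, denoised)) / len(clean)
    if mse == 0:
        return float("inf")  # identical images

    return 10.0 * math.log10(data_range ** 2 / mse)


if __name__ == "__main__":
    clean = [0.0, 1.0, 0.0, 1.0]
    denoised = [0.1, 1.1, 0.1, 1.1]  # constant error of 0.1 per pixel
    print(f"PSNR: {psnr(clean, denoised):.2f} dB")
```

A score like this cannot be computed in the unsupervised setting, where no clean reference exists for the data being denoised, which is one reason a paired setup supports tighter comparisons between methods.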

The MDC25 and CIDC25 result pages (hosted on Grand-Challenge) hold the detailed leaderboards and entries; the CIDC25 platform remains accessible for benchmarking and late submissions:

- Microscopy Supervised Denoising Challenge (MDC25): https://ai4life-mdc25.grand-challenge.org/

- Calcium Imaging Denoising Challenge (CIDC25): https://ai4life-cidc25.grand-challenge.org/

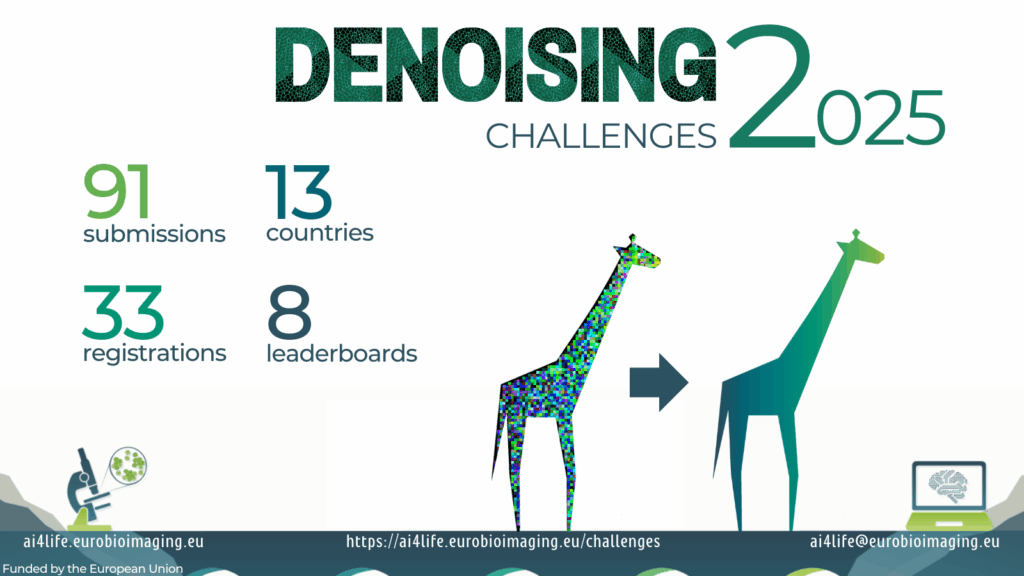

These two challenges together drew 91 submissions from 13 countries across eight leaderboards, with more details at:

https://ai4life.eurobioimaging.eu/ai4life-denoising-challenges-2025-results/

Why these challenges matter

- Benchmarking: Standardised challenges let us compare methods fairly, across diverse datasets and noise types.

- Broad community engagement: By opening up to anyone (not just project partners), AI4Life attracts fresh ideas and cross-pollination from adjacent fields.

From calls to conversations: reflections from our experts

Challenges are not just competitions; each involves substantial coordination, data curation, evaluation, and community outreach. To bring that human side forward, we recorded conversations with several experts who played key roles in designing, running, and evaluating these challenges.

Watch the full video and hear their stories: